Application

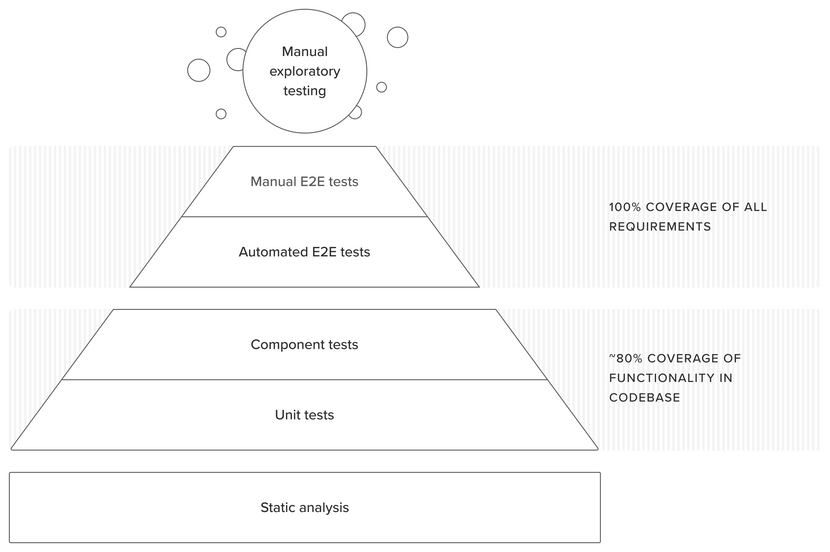

This diagram should give a sense of how we test our products. The size of each step on the pyramid approximately corresponds to the volume of tests within it.

Here’s how this applies in practice, using the example of a mobile project built in React Native on a JavaScript stack.

Static analysis

Run on every commit:

- Prettier for automated code formatting (via @hannohealth/eslint-config).

- ESLint for linting and conventions, including defensive programming standards and code complexity analysis (also via @hannohealth/eslint-config).

- Flow for static typing of JS files.
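As a rough sketch, a project-level `.eslintrc.js` could pull in the shared configuration as shown here. The `complexity` override and its threshold are illustrative assumptions, not values taken from `@hannohealth/eslint-config`.

```javascript
// .eslintrc.js (hypothetical project-level config)
module.exports = {
  // Formatting and linting rules come from the shared package.
  extends: ['@hannohealth/eslint-config'],
  rules: {
    // Assumed project-specific override: report functions whose cyclomatic
    // complexity exceeds 10. The threshold is illustrative only.
    complexity: ['error', { max: 10 }],
  },
};
```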

Run periodically across the entire codebase:

- Semgrep for security analysis, standards and custom rules.

- Snyk/FOSSA and GitHub Dependabot for analysis of dependencies and environments for potential security and licensing issues.

The frequency of these checks can be increased after the first production release, or even run on every commit if desired.

Unit tests

We write these to test pure functions. They are fast to write and run (when compared to end-to-end tests) and provide immediate feedback when developing.

The depth of testing here will depend on the complexity and risk of the function being tested—we’d write more tests for a state machine implementing a complex clinical procedure than we would for a less critical UI element.

Typically implemented with Jest and react-native-testing-library.
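To illustrate the kind of pure function this layer targets, here is a minimal sketch of a state machine transition function with a table of test cases. The states, events and function names are hypothetical, not taken from a real product. In a Jest suite the same table could be passed to `test.each`; a plain loop is used here so the sketch runs without dependencies.

```javascript
// Hypothetical transition table for a measurement procedure.
// State and event names are illustrative only.
const TRANSITIONS = {
  idle: { START: 'measuring' },
  measuring: { READING_OK: 'review', READING_FAILED: 'idle' },
  review: { CONFIRM: 'complete', RETRY: 'measuring' },
  complete: {},
};

// Pure function: the result depends only on the arguments.
// Unknown states or events leave the state unchanged rather than throwing.
function transition(state, event) {
  const row = TRANSITIONS[state];
  const known =
    row !== undefined && Object.prototype.hasOwnProperty.call(row, event);
  return known ? row[event] : state;
}

// Each case is [current state, event, expected next state].
const CASES = [
  ['idle', 'START', 'measuring'],
  ['measuring', 'READING_OK', 'review'],
  ['measuring', 'READING_FAILED', 'idle'],
  ['review', 'RETRY', 'measuring'],
  ['review', 'CONFIRM', 'complete'],
  ['complete', 'START', 'complete'], // terminal state ignores events
  ['idle', 'CONFIRM', 'idle'], // out-of-order event is ignored
];

// Returns the cases whose actual result differs from the expected one.
function failingCases() {
  return CASES.filter(
    ([state, event, expected]) => transition(state, event) !== expected,
  );
}
```

Because the function has no side effects, each case runs in isolation and the table can grow with the risk of the procedure being modelled.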

Integration/component tests

These validate the integration of functions into the context of the app (e.g. wrapping a component in a provider or passing props into a component), checking the output to confirm that they behave as expected.

Also implemented via Jest.

End-to-end (E2E) tests

Automated

These are written to cover product Requirements. Here, we test components and interactions in simulated scenarios within the app, interacting with the UI to confirm that the Requirement is being met.

We favour:

There are fewer of these automated end-to-end tests than there are unit and integration tests, since they run on emulators, are much slower to execute, and are more prone to flakiness.

Manual

These are designed to:

- Address coverage gaps in automated end-to-end tests, ensuring that we maintain 100% coverage of Requirements to satisfy our traceability needs.

- Provide an additional layer of coverage for high-risk functionality.

These are not implemented in the codebase; instead, the test scripts are defined in Jira via Xray. We consider manual tests to be technical debt which will need to be repaid at some point in the future. They are prime candidates for conversion into automated end-to-end tests later on.

Manual exploratory testing

This is the final layer of testing, carried out by our QA testers, often with involvement from clients. It’s a combination of chaos engineering and guerrilla usability testing, carried out on the Alpha builds of the product.

It is designed to help us spot issues which exist outside the scripted tests (often indicating a gap in testing that needs to be filled) and to test on a wider variety of devices.

Principles

Maximise coverage

Widespread test coverage allows us to make codebase changes with confidence, reducing the risk of inadvertently introducing bugs:

- For SaMD products, our definition of done for a Requirement is that it is covered by at least one passing end-to-end test.

- Static analysis and other types of tests then provide further, repository-wide, test coverage.

- We use protected branches and pre-release checks to make sure that the whole automated test suite is green before a PR is merged or a release is generated.

- When a bug is identified, the fix should include a test to catch future regressions where possible.
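The definition of done for a Requirement, described in the first point of this list, can be expressed as a simple check. The sketch below assumes that end-to-end results are available as records listing the Requirement IDs each test covers. The function name, the ID format and the record shape are all hypothetical.

```javascript
// Returns the IDs of requirements that have no passing end-to-end test.
// `requirements` is a list of IDs; `e2eResults` is a list of
// { requirementIds: string[], status: 'passed' | 'failed' } records.
// Both shapes are assumptions made for this sketch.
function findUncoveredRequirements(requirements, e2eResults) {
  const covered = new Set();
  for (const result of e2eResults) {
    if (result.status !== 'passed') continue; // failed tests give no coverage
    for (const id of result.requirementIds) covered.add(id);
  }
  return requirements.filter((id) => !covered.has(id));
}

const uncovered = findUncoveredRequirements(
  ['REQ-1', 'REQ-2', 'REQ-3'],
  [
    { requirementIds: ['REQ-1'], status: 'passed' },
    { requirementIds: ['REQ-2'], status: 'failed' },
    { requirementIds: ['REQ-1', 'REQ-3'], status: 'passed' },
  ],
);
console.log(uncovered); // [ 'REQ-2' ]
```

A check along these lines could run as a pre-release step, failing the build whenever the returned list is not empty.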

Minimise flakiness

Flaky tests undermine developer confidence in the test suite, creating a sense of tests working against us, rather than for us. They are also costly, since they eat up developer hours each time they misbehave.

To minimise this, tests should be written to be self-contained and robust, minimising reliance on external dependencies wherever possible. While test speed is desirable, reliability should take priority when the two come into conflict.
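One common way to keep a test self-contained is to pass external dependencies, such as the system clock, into the code under test. The sketch below is an assumption-laden illustration: `isSessionExpired`, `SESSION_TIMEOUT_MS` and the timeout value are hypothetical.

```javascript
// Hypothetical function: decides whether a session has timed out.
// The clock is injected, so tests never depend on the real time.
const SESSION_TIMEOUT_MS = 15 * 60 * 1000; // illustrative value

function isSessionExpired(lastActiveAt, now = Date.now) {
  return now() - lastActiveAt >= SESSION_TIMEOUT_MS;
}

// In a test, a fixed clock makes the outcome deterministic.
const fixedNow = () => 1_000_000;
const justActive = isSessionExpired(1_000_000 - 1000, fixedNow);
const longIdle = isSessionExpired(1_000_000 - SESSION_TIMEOUT_MS, fixedNow);
```

With the fixed clock, `justActive` is always `false` and `longIdle` is always `true`, regardless of when or where the test runs. Production code calls the function without the second argument and gets the real clock.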

Be pragmatic

For maximum value, automated end-to-end tests covering a feature should be written alongside the feature itself, rather than being backfilled at the end of the project. But there are pragmatic exceptions to this:

- When we are prototyping a feature and expect it to be significantly reworked (and tested) ahead of a real-world release, we consider it acceptable to merge an initial scaffold of the functionality without full test coverage. This helps us to avoid wasting time writing tests for placeholder functionality that will be discarded later on.

- When the test is expected to be flaky or complex. All projects have finite resources. When we’re building the initial version of a product, there are some scenarios and features which would be particularly resource-intensive to reliably cover via automated testing: e.g. contextual events, such as a notification being received from the cloud when 30 minutes of activity is recorded by a connected wearable device.

In both of the above scenarios:

- Any gaps in testing coverage should be documented at the time the code is written.

- Gaps should be revisited when the feature is finalised. If resources permit, an automated end-to-end test should be introduced.

- If this is not feasible, a manual end-to-end test should instead be defined and included in the Test Plan.

Relying on manual tests is far from ideal, since:

- They do not provide real-time feedback. Unlike with automated tests, we won’t know the status of a manual test until it has been manually executed. Since we don’t execute every manual test in every PR, this makes it harder to make codebase changes with confidence.

- They increase QA workload prior to each release, disincentivising frequent releases.

They are nevertheless a pragmatic compromise, allowing us to ensure all Requirements are covered by tests (meeting the definition of done) while maintaining speed to market and engineering flexibility.

Maximise safety

Some parts of a product are riskier than others. For example, the implementation of an insulin dosing algorithm in a diabetes app probably holds more clinical risk than a screen where gamified badges and achievements are presented.

Our testing approach should reflect this reality—higher risk functionality should be covered by multiple layers of tests. In certain situations, additional manual tests may even be considered as a further safety measure, even if the automated tests ought to be sufficient.

Protect user data

To protect user data and PHI, test data should be representative of production data, but should not be actual production data containing real user information. This reflects the fact that tests will be run outside the secured production environment, on developer machines and the CI server.
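As a minimal sketch of this idea, test fixtures can be generated rather than copied from production. The factory below is hypothetical: the field names, name lists and `isTestAccount` flag are assumptions. It is deterministic, so the same index always produces the same record.

```javascript
// Obviously synthetic name parts, so fixtures cannot be mistaken for real users.
const FIRST_NAMES = ['Alex', 'Sam', 'Jordan', 'Taylor'];
const LAST_NAMES = ['Example', 'Sample', 'Placeholder'];

// Builds a synthetic user record from an index. No production data is read.
function makeTestUser(index) {
  const first = FIRST_NAMES[index % FIRST_NAMES.length];
  const last = LAST_NAMES[index % LAST_NAMES.length];
  return {
    id: `test-user-${String(index).padStart(4, '0')}`,
    name: `${first} ${last}`,
    // example.com is reserved for documentation and testing.
    email: `${first}.${last}.${index}@example.com`.toLowerCase(),
    // Synthetic year of birth spread over a plausible adult range.
    yearOfBirth: 1950 + ((index * 7) % 50),
    isTestAccount: true,
  };
}

const users = Array.from({ length: 3 }, (_, i) => makeTestUser(i));
```

Marking each record with a flag such as `isTestAccount` makes it easier to filter synthetic accounts out of analytics or to clean them up later.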

Any QA or exploratory testing that needs to be carried out in the production environment should make use of dummy user accounts created for this purpose.

Outputs

Tests and their executions create a large number of outputs. We describe these in more detail in Traceability.